Tag: multitenant

Efficient test database refresh using Data Guard Snapshot Standby, Multitenant and storage snapshots

- Written by: ilmarkerm

- Category: Blog entry

- Published: March 23, 2024

There are many different ways to refresh a test database with production data. Here I’m going to describe another one that might be on the complex side, but the basic requirements I had are:

- Data must be cleaned before opening it up for testing. The cleaning process is extensive and usually takes a few days to run.

- Switching testing from the old copy to a new copy must be relatively fast. Also, if problems are discovered, we should be able to switch back to the old copy relatively fast.

- Databases are large, hundreds of terabytes, so any kind of data copy over the network (like PDB cloning over a database link) would take an unacceptable amount of time and resources.

- There is no network connection between the test database network and the production database network, so PDB cloning over a database link is not possible anyway.

- The system should also work with databases that have a Data Guard standby with Fast-Start Failover configured.

Copying the data in any form will not work, since the databases are really large, so some kind of storage level snapshot is needed. We can use a modern NAS system (that the databases utilise over NFS) that can do snapshots and thin clones, although not in multiple layers.

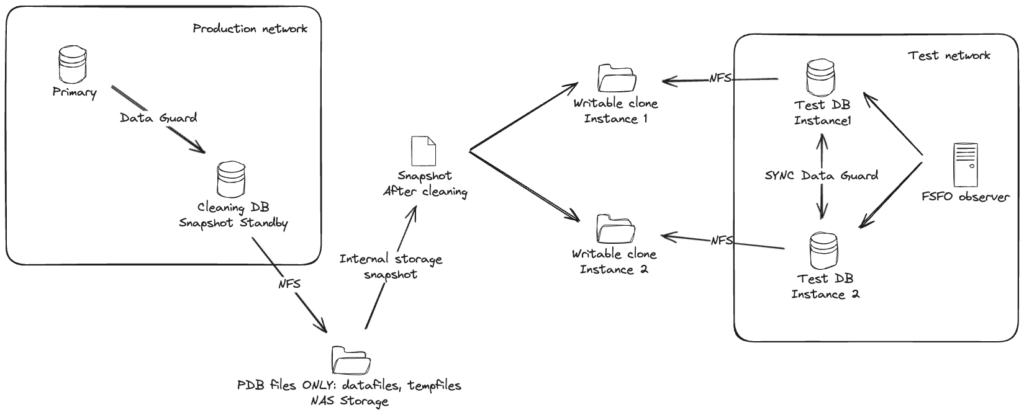

Here is one attempt to draw out the system, showing the more complicated case where the test database actually has two database instances, in Data Guard Fast-Start Failover mode.

Basically:

- There is a special Data Guard standby for the cleaning process. This standby is using an external NAS storage for storing all PDB datafiles and tempfiles. No CDB files, like CDB datafiles, online logs, standby logs or recovery area should be stored in that NAS folder – keep CDB files separate.

- To start the cleaning process, put the cleaning standby in Snapshot Standby mode (instructions are here) and run all cleaning processes and scripts on the snapshot standby. After cleaning is finished successfully, create PDB metadata XML files and shut down the snapshot standby.

- Create storage level snapshot of the NAS folder storing the PDB files.

- Restore Snapshot Standby back to being a normal physical standby.

- Create a writable clone from the same storage snapshot for the test database. If the test database consists of multiple Data Guard databases, then create a clone (from the same snapshot) for each one of them and mount them to the test database hosts.

- On the test database create a new pluggable database as clone (using NOCOPY to avoid any data movement). In case of Data Guard, do not forget to set standby_pdb_source_file_directory so the standby database would know where to find the data files of the plugged in database.

Done. Both the old and the new copy are now attached to the same test CDB as independent PDBs. When you are happy with the new copy, just direct testing traffic to the new PDB. The old one is then no longer needed, so drop it and clean up the filesystems.

This may sound too complex, but the whole point is to avoid moving around large amounts of data. The key to supporting rapid switching to the new copy, and to supporting Data Guard setups (on the test environment side), is keeping the test database CDB intact.

The steps

I have a post about using Snapshot Standby, you can read it here. All the code below is for the experts…

The preparation

A big part of the system is preparing the cleaning database that will be used as the Snapshot Standby.

Here I use the following paths:

- /oradata – Location for standby database CDB files, online logs, standby logs, recovery area

- /nfs/pdb – NFS folder from external NAS, that will ONLY store all PDB datafiles and tempfiles
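A quick way to check this layout is to feed the datafile and tempfile listing through a small filter. The sketch below is only an illustration: the function name check_pdb_file_layout is made up, and it assumes input lines of the form "CON_ID NAME" (for example spooled from v$datafile and v$tempfile), with con_id 1 being CDB$ROOT and con_id 2 being PDB$SEED.

```shell
# Hypothetical helper, not from the original post.
# Reads "CON_ID NAME" lines on stdin and reports every file that belongs to
# a user PDB (con_id >= 3) but is not stored under the directory given as $1.
# Header and separator lines are ignored because their first field is not a number.
# Exit status is 0 when the layout is clean and 1 otherwise.
check_pdb_file_layout() {
  awk -v dir="$1" '
    $1 ~ /^[0-9]+$/ && $1 >= 3 && index($2, dir "/") != 1 {
      print "misplaced: " $0
      bad = 1
    }
    END { exit bad + 0 }
  '
}
```

For example, `check_pdb_file_layout /nfs/pdb < files.txt`, where files.txt is the spooled listing, prints nothing when every user PDB file sits under /nfs/pdb.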

# Parameters

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

db_create_file_dest string /nfs/pdb

db_create_online_log_dest_1 string /oradata

db_recovery_file_dest string /oradata

db_recovery_file_dest_size big integer 10T

# Datafiles

CON_ID NAME

---------- --------------------------------------------------------------------------------------------------------------------------

1 /oradata/PROD1MT101CL/datafile/o1_mf_audit_tb__0d68332o_.dbf

1 /oradata/PROD1MT101CL/datafile/o1_mf_sysaux__0d5fjj5y_.dbf

1 /oradata/PROD1MT101CL/datafile/o1_mf_system__0d4zdst4_.dbf

1 /oradata/PROD1MT101CL/datafile/o1_mf_undotbs1__0d5yw58n_.dbf

1 /oradata/PROD1MT101CL/datafile/o1_mf_users__0d65hljg_.dbf

2 /oradata/PROD1MT101CL/C24DD111D3215498E0536105500AAC63/datafile/data_D-WI93OP21_I-1717445842_TS-SYSAUX_FNO-4_q92k3val

2 /oradata/PROD1MT101CL/C24DD111D3215498E0536105500AAC63/datafile/data_D-WI93OP21_I-1717445842_TS-SYSTEM_FNO-2_p32k3v89

2 /oradata/PROD1MT101CL/C24DD111D3215498E0536105500AAC63/datafile/data_D-WI93OP21_I-1717445842_TS-UNDOTBS1_FNO-6_q22k3vag

3 /nfs/pdb/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/o1_mf_audit_tb__0fbbwglb_.dbf

4 /nfs/pdb/PROD1MT101CL/C2811CAFC29D1DC9E053F905500A7EC9/datafile/o1_mf_audit_tb__0fk2wkxy_.dbf

4 /nfs/pdb/PROD1MT101CL/C2811CAFC29D1DC9E053F905500A7EC9/datafile/o1_mf_users__0fj4hm5h_.dbf

5 /nfs/pdb/PROD1MT101CL/CF6A94E61EF92940E053F905500AFB8E/datafile/o1_mf_audit_tb__0fsslsvv_.dbf

5 /nfs/pdb/PROD1MT101CL/CF6A94E61EF92940E053F905500AFB8E/datafile/o1_mf_users__0frm1yg5_.dbf

6 /nfs/pdb/PROD1MT101CL/CF6A94E61F3E2940E053F905500AFB8E/datafile/o1_mf_audit_tb__0fz8nq60_.dbf

6 /nfs/pdb/PROD1MT101CL/CF6A94E61F3E2940E053F905500AFB8E/datafile/o1_mf_users__0fy4j81x_.dbf

7 /nfs/pdb/PROD1MT101CL/CF6A94E61F832940E053F905500AFB8E/datafile/o1_mf_audit_tb__0f46dfvs_.dbf

7 /nfs/pdb/PROD1MT101CL/CF6A94E61F832940E053F905500AFB8E/datafile/o1_mf_users__0f3cn007_.dbf

8 /nfs/pdb/PROD1MT101CL/CF6A94E61FC82940E053F905500AFB8E/datafile/o1_mf_audit_tb__0g5h842b_.dbf

8 /nfs/pdb/PROD1MT101CL/CF6A94E61FC82940E053F905500AFB8E/datafile/o1_mf_users__0g2v64l9_.dbf

9 /nfs/pdb/PROD1MT101CL/05126406D61853B3E0635005500AA12B/datafile/o1_mf_audit_tb__0g9rsqpo_.dbf

9 /nfs/pdb/PROD1MT101CL/05126406D61853B3E0635005500AA12B/datafile/o1_mf_inmemory__0g8yrdvh_.dbf

# Tempfiles

CON_ID NAME

---------- -------------------------------------------------------------------------------------------------

1 /oradata/PROD1MT101CL/datafile/o1_mf_temp__0d35c01p_.tmp

2 /oradata/PROD1MT101CL/C24DD111D3215498E0536105500AAC63/datafile/o1_mf_temp__0d36q7dx_.tmp

3 /nfs/pdb/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/o1_mf_temp__0gr02w4t_.tmp

3 /nfs/pdb/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/o1_mf_temp__0gr0j0wr_.tmp

3 /nfs/pdb/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/o1_mf_temp_dba__0gqzydts_.tmp

4 /nfs/pdb/PROD1MT101CL/C2811CAFC29D1DC9E053F905500A7EC9/datafile/o1_mf_temp__0grhwybg_.tmp

4 /nfs/pdb/PROD1MT101CL/C2811CAFC29D1DC9E053F905500A7EC9/datafile/o1_mf_temp__0grjcvk4_.tmp

4 /nfs/pdb/PROD1MT101CL/C2811CAFC29D1DC9E053F905500A7EC9/datafile/o1_mf_temp_dba__0h6slfch_.tmp

5 /nfs/pdb/PROD1MT101CL/CF6A94E61EF92940E053F905500AFB8E/datafile/o1_mf_temp__0gokwd9n_.tmp

5 /nfs/pdb/PROD1MT101CL/CF6A94E61EF92940E053F905500AFB8E/datafile/o1_mf_temp__0gol9jc6_.tmp

5 /nfs/pdb/PROD1MT101CL/CF6A94E61EF92940E053F905500AFB8E/datafile/o1_mf_temp_dba__0gokr9ng_.tmp

6 /nfs/pdb/PROD1MT101CL/CF6A94E61F3E2940E053F905500AFB8E/datafile/o1_mf_temp__0gnrh7x8_.tmp

6 /nfs/pdb/PROD1MT101CL/CF6A94E61F3E2940E053F905500AFB8E/datafile/o1_mf_temp__0gnrw0dj_.tmp

6 /nfs/pdb/PROD1MT101CL/CF6A94E61F3E2940E053F905500AFB8E/datafile/o1_mf_temp_dba__0gnrb2gg_.tmp

7 /nfs/pdb/PROD1MT101CL/CF6A94E61F832940E053F905500AFB8E/datafile/o1_mf_temp__0gmw72co_.tmp

7 /nfs/pdb/PROD1MT101CL/CF6A94E61F832940E053F905500AFB8E/datafile/o1_mf_temp__0gmwnqsp_.tmp

7 /nfs/pdb/PROD1MT101CL/CF6A94E61F832940E053F905500AFB8E/datafile/o1_mf_temp_dba__0gmw1xm3_.tmp

8 /nfs/pdb/PROD1MT101CL/CF6A94E61FC82940E053F905500AFB8E/datafile/o1_mf_temp__0gm118wt_.tmp

8 /nfs/pdb/PROD1MT101CL/CF6A94E61FC82940E053F905500AFB8E/datafile/o1_mf_temp__0gm1dv5n_.tmp

8 /nfs/pdb/PROD1MT101CL/CF6A94E61FC82940E053F905500AFB8E/datafile/o1_mf_temp_dba__0gm0wzcc_.tmp

9 /nfs/pdb/PROD1MT101CL/05126406D61853B3E0635005500AA12B/datafile/o1_mf_temp__0gj66tgb_.tmp

9 /nfs/pdb/PROD1MT101CL/05126406D61853B3E0635005500AA12B/datafile/o1_mf_temp_dba__0gh7wbpf_.tmp

# Logs

MEMBER

--------------------------------------------------------

/oradata/PROD1MT101CL/onlinelog/o1_mf_1__0b0frl6b_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_2__0b1qco5j_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_3__0b2bd0od_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_4__0b2z3qvo_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_5__0b3tkddo_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_6__0b4gvg2h_.log

/oradata/PROD1MT101CL/onlinelog/o1_mf_7__0b51zbqp_.log

Cleaning

# Convert the special Data Guard standby database to a snapshot standby

DGMGRL> convert database prod1mt101cl to snapshot standby;

# Now run all your cleaning scripts on this special database

SQL> @all_of_my_special_cleaning_scripts

# Cleaning is now finished successfully

# Close and reopen each PDB in READ ONLY mode

SQL> alter pluggable database all close;

SQL> alter pluggable database all open read only;

# Create XML metadata file for each PDB

SQL> exec DBMS_PDB.DESCRIBE('/nfs/pdb/apppdb1.xml','APPPDB1');

SQL> exec DBMS_PDB.DESCRIBE('/nfs/pdb/apppdb2.xml','APPPDB2');

SQL> exec DBMS_PDB.DESCRIBE('/nfs/pdb/apppdb3.xml','APPPDB3');

# Shut down the cleaning database

SQL> shutdown immediate

Snapshot

Now use your NAS toolkit (REST API for example) to create a storage level snapshot from the /nfs/pdb filesystem.

Convert Snapshot Standby back to physical standby

Use the instructions in this post to restore the cleaning instance back to being a physical standby.
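For reference, the conversion is a single Data Guard broker command in each direction. This is a minimal sketch, assuming the broker configuration is already in place and using the database name prod1mt101cl from the example above. The dg_convert_cmd wrapper is a made-up name that only prints the DGMGRL command, so it can be reviewed before it is run.

```shell
# Hypothetical helper: print the DGMGRL command that switches a standby
# between the snapshot standby and physical standby roles.
#   $1 = database name as known to the broker
#   $2 = "snapshot" or "physical"
dg_convert_cmd() {
  case "$2" in
    snapshot|physical)
      printf 'convert database %s to %s standby;\n' "$1" "$2"
      ;;
    *)
      echo "usage: dg_convert_cmd <db> snapshot|physical" >&2
      return 1
      ;;
  esac
}
```

Running `dg_convert_cmd prod1mt101cl physical` prints the command to paste at the DGMGRL> prompt once the storage snapshot has been taken.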

Attach PDBs to test database CDB

First, use your NAS toolkit to create a thin writable clone from the snapshot created earlier (or multiple clones if the test setup has a Data Guard standby, a dedicated copy for each) and mount them to the test database hosts as /nfs/newclone.

# If the test setup has Data Guard, set standby_pdb_source_file_directory

alter system set standby_pdb_source_file_directory='/nfs/newclone/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/' scope=memory;

# On test primary create new pluggable database as clone

create pluggable database apppdb1_newclone as clone using '/nfs/newclone/apppdb1.xml' source_file_directory='/nfs/newclone/PROD1MT101CL/CC1BE00B92E672FFE053F905500A1BAE/datafile/' nocopy tempfile reuse;

# Open it

alter pluggable database apppdb1_newclone open;

Done.
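When several PDBs need to be plugged in, the same statements repeat with different names and paths. Here is a minimal sketch that prints them for one PDB, following the steps above. The gen_plugin_sql name and its argument order are assumptions made for illustration.

```shell
# Hypothetical helper: print the SQL for plugging one PDB in from a thin clone.
#   $1 = new PDB name
#   $2 = XML metadata file created by DBMS_PDB.DESCRIBE
#   $3 = directory on the clone that holds the PDB datafiles
gen_plugin_sql() {
  cat <<SQL
-- Needed only when the test setup has a Data Guard standby
alter system set standby_pdb_source_file_directory='$3' scope=memory;
-- Plug in the PDB without copying any data
create pluggable database $1 as clone using '$2' source_file_directory='$3' nocopy tempfile reuse;
alter pluggable database $1 open;
SQL
}
```

The output can be reviewed and then run in SQL*Plus, once for each PDB that has an XML metadata file on the clone.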

- Written by: ilmarkerm

- Category: Blog entry

- Published: July 9, 2016

Lately I’ve been upgrading our 11g Standard Edition databases in test environments to 12c Enterprise Edition and also plugging them in to a multitenant container database.

It’s a new technology for Oracle, but I was still quite surprised by the number of issues I faced when trying to plug in an existing non-CDB database. After resolving all these issues, it has been a quite painless process since then.

In short, upgrading 11g database to 12c pluggable database involves the following steps:

* Upgrade 11g database to 12c using the normal database upgrade procedures. This step will result in 12c non-CDB database.

* In the target CDB database plug in the new upgraded database as a new pluggable database.

* Run $ORACLE_HOME/rdbms/admin/noncdb_to_pdb.sql in the new PDB. This step will convert the non-CDB data dictionary to a PDB data dictionary. After this step you can open the newly added PDB.

This post is mostly about the issues I encountered when running the last described step – executing $ORACLE_HOME/rdbms/admin/noncdb_to_pdb.sql. Hopefully it’ll be helpful if you hit similar problems.

Version: 12.1.0.2, 2-node RAC

Patches: April 2016 PSU + OJVM PSU

Platform: Oracle Linux 6 x86-64

noncdb_to_pdb.sql takes a really long time to execute

This was the first problem I encountered. After 1.5 hours I killed my session. That was really weird, because executing it should only take about 20 minutes according to Oracle documentation. The step the script was stuck on was:

-- mark objects in our PDB as common if they exist as common in ROOT

Looking at the wait events the session was not waiting for a blocker, it was actively executing many parallel sessions.

I found the following blog post that described the same problem and the described solution also helped for me: Link to Bertrand Drouvot blog

But one addition, instead of modifying the noncdb_to_pdb.sql script, I executed ALTER SESSION before running noncdb_to_pdb.sql.

SQL> alter session set container=newpdb;

SQL> alter session set optimizer_adaptive_features=false;

SQL> @$ORACLE_HOME/rdbms/admin/noncdb_to_pdb.sql

noncdb_to_pdb.sql hangs at alter pluggable database close

The next issue I faced: noncdb_to_pdb.sql just hung mid-execution, and the statement it was executing was

SQL> alter pluggable database "&pdbname" close;

The session was waiting for opishd.

Solution: Apply the bugfix for Bug 20172151 – NONCDB_TO_PDB.SQL SCRIPT HANGS DURING UPGRADE. This just updates the noncdb_to_pdb.sql script itself to execute alter pluggable database "&pdbname" close IMMEDIATE instances = all; instead of a normal close.

noncdb_to_pdb.sql fails with ORA-600 [kspgsp2]

That was a fun one 🙂 Not every time, but in most executions noncdb_to_pdb.sql failed almost at the end with the following message:

SQL> alter session set "_ORACLE_SCRIPT"=true;

Session altered.

SQL>

SQL> drop view sys.cdb$tables&pdbid;

drop view sys.cdb$tables5

*

ERROR at line 1:

ORA-00600: internal error code, arguments: [kspgsp2], [0xBF3C9E3F8], [2174],

[recyclebin], [], [], [], [], [], [], [], []

It failed every time at the same drop view statement. Searching Oracle support did not give me anything helpful. There were many ORA-600 [kspgsp2] issues, but nothing matched my case. Finally I noticed that one argument was [recyclebin] and decided to try turning the recyclebin off for the session. It helped.

Successful non-CDB to PDB conversion

Getting successful execution of noncdb_to_pdb.sql required me to:

* Apply patch 20172151

* Run noncdb_to_pdb.sql using the following sequence of commands:

SQL> alter session set container=newpdb;

SQL> alter session set optimizer_adaptive_features=false;

SQL> alter session set recyclebin=off;

SQL> @$ORACLE_HOME/rdbms/admin/noncdb_to_pdb.sql

Take care of the services!

This problem may be specific to our environment, but I’ll describe it anyway.

We use services a lot, all applications that connect to the database get their own dedicated service. So the applications connect using JDBC connection string that looks something like this:

jdbc:oracle:thin:@//clusername.example.com/application.scrum.example.com

Here application is configured as a service using srvctl, and scrum.example.com is the database domain name, which depends on the environment. The same application in the QA environment will have the connection string:

jdbc:oracle:thin:@//clusername.example.com/application.qa.example.com

We decided to use only one CDB for all environments, but db_domain parameter cannot be different for each PDB. In order to not change the application connection strings I had to create the new services in srvctl using the FULL service name, then Oracle will not append the database domain name to the service name:

srvctl add service -database cdb -preferred cdb1,cdb2 -pdb newpdb -service application.scrum.example.com

srvctl start service -database cdb -service application.scrum.example.com

After adding the services for the first database all of them started just fine and applications connected just fine, but when starting the services for the second environment (qa) I got the following error:

srvctl add service -database cdb -preferred cdb1,cdb2 -pdb newpdbqa -service application.qa.example.com

srvctl start service -database cdb -service application.qa.example.com

...

ORA-44311: service application not running

ORA-06512: at "SYS.DBMS_SYS_ERROR", line 86

ORA-06512: at "SYS.DBMS_SERVICE_ERR", line 40

ORA-06512: at "SYS.DBMS_SERVICE", line 421

ORA-06512: at line 1

...

But when I tried to add a new service that did not exist previously, it started just fine. I started digging into services on the CDB level and found that all imported PDB-s had also imported their old short-name services into the CDB:

SQL> alter session set container=cdb$root;

Session altered.

SQL> select name from cdb_services order by 1;

NAME

------------------------------------------------

SYS$BACKGROUND

SYS$USERS

...

application

application

application.scrum.example.com

application2

application2

application2.scrum.example.com

...
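To list only the conflicting names instead of scanning the whole output, the same view can be grouped by name. This is a minimal sketch, relying on the CON_ID column of CDB_SERVICES to tell the containers apart. The dup_services_sql wrapper is a made-up name that just prints the query.

```shell
# Hypothetical helper: print a query that lists service names
# registered in more than one container of the CDB.
dup_services_sql() {
  cat <<'SQL'
select name, count(distinct con_id) as containers
from cdb_services
group by name
having count(distinct con_id) > 1
order by name;
SQL
}
```

The printed query is meant to be run while connected to cdb$root, as in the session above.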

I just assumed it can be confusing for CDB if different PDB-s have conflicting services running and I manually went into each PDB and removed the old short service names.

SQL> alter session set container=newpdb;

SQL> exec dbms_service.delete_service('application');

SQL> exec dbms_service.delete_service('application2');

SQL> alter session set container=newpdbqa;

SQL> exec dbms_service.delete_service('application');

SQL> exec dbms_service.delete_service('application2');
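With many PDB-s and services the cleanup gets repetitive, so the statements can be generated instead of typed. A minimal sketch under the assumption that the short service names to remove are already known; gen_delete_services_sql is a made-up name and it only prints the SQL.

```shell
# Hypothetical helper: print the service cleanup SQL for one PDB.
#   $1 = PDB name, remaining arguments = short service names to delete
gen_delete_services_sql() {
  pdb=$1
  shift
  printf 'alter session set container=%s;\n' "$pdb"
  for svc in "$@"; do
    printf "exec dbms_service.delete_service('%s');\n" "$svc"
  done
}
```

For example, `gen_delete_services_sql newpdbqa application application2` prints the three statements used for the QA PDB above.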

After that new services started just fine.

SQL> alter session set container=cdb$root;

SQL> select name from cdb_services order by 1;

NAME

------------------------------------------------

SYS$BACKGROUND

SYS$USERS

...

application.scrum.example.com

application2.scrum.example.com

application.qa.example.com

application2.qa.example.com

...